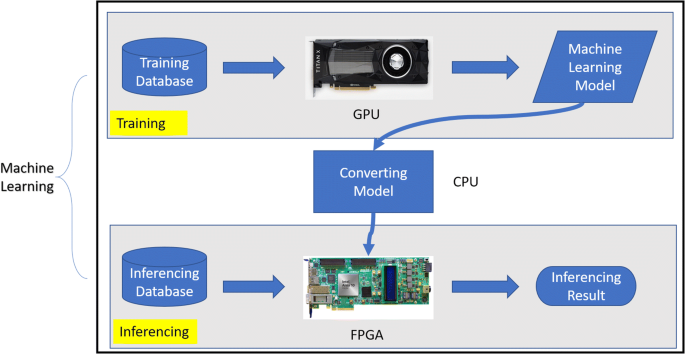

A hybrid GPU-FPGA based design methodology for enhancing machine learning applications performance | SpringerLink

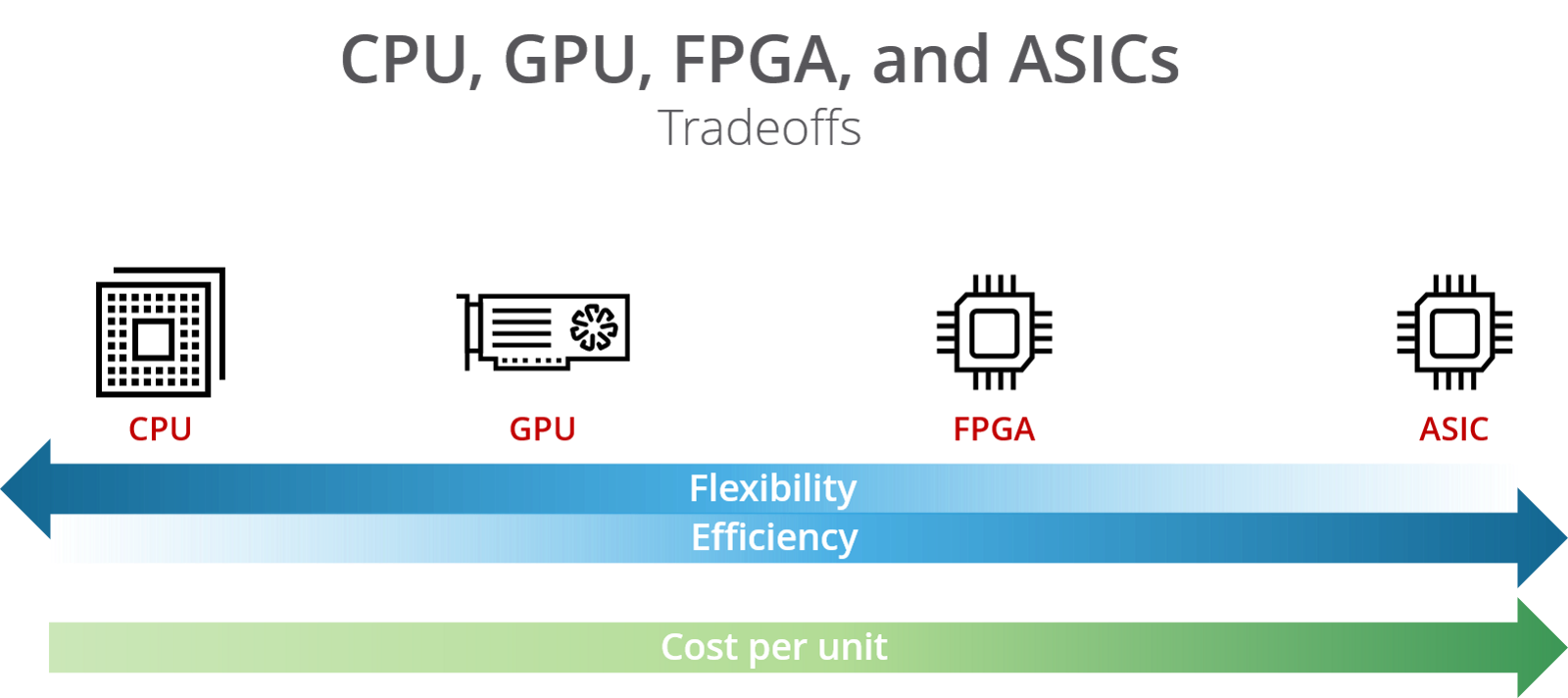

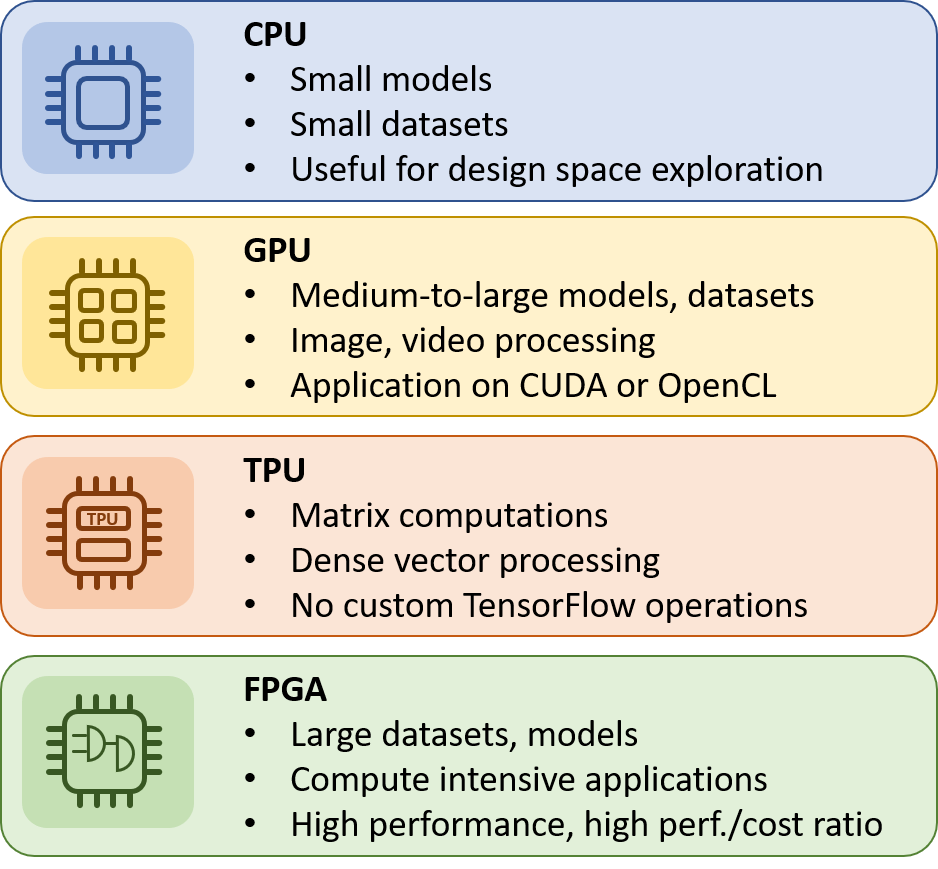

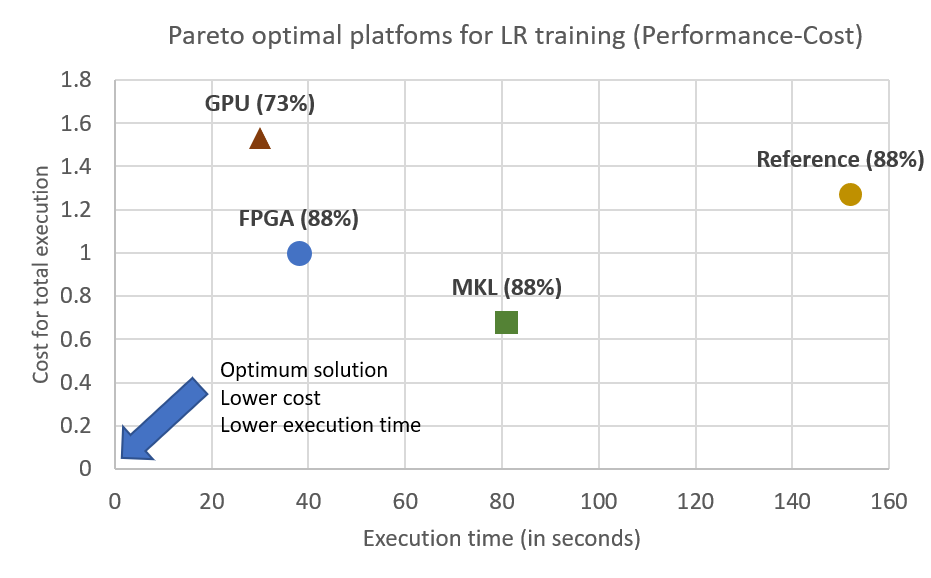

CPU, GPU or FPGA: Performance evaluation of cloud computing platforms for Machine Learning training – InAccel

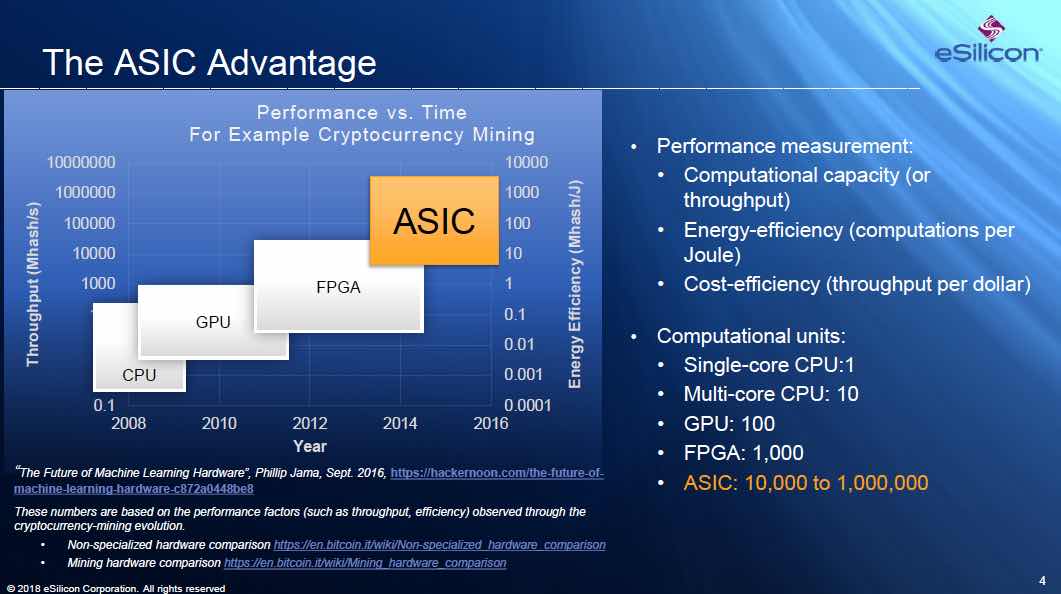

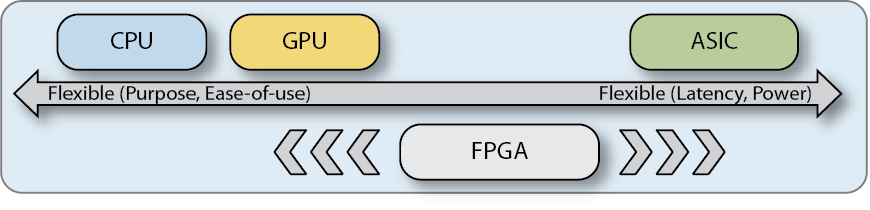

Cryptocurrency Mining: Why Use FPGA for Mining? FPGA vs GPU vs ASIC Explained | by FPGA Guide | FPGA Mining | Medium

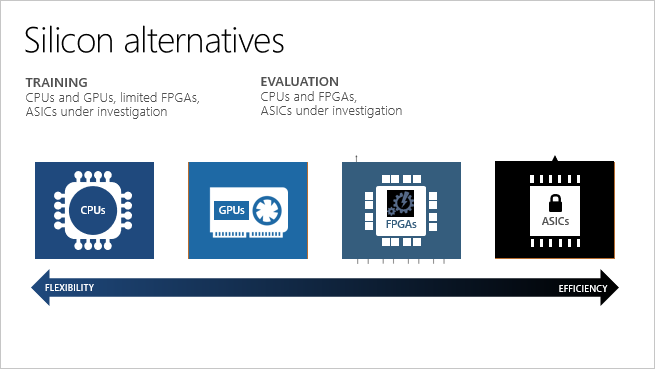

Next-Generation AI Hardware needs to be Flexible and Programmable | Achronix Semiconductor Corporation

Mipsology Zebra on Xilinx FPGA Beats GPUs, ASICs for ML Inference Efficiency - Embedded Computing Design

ASIC Clouds: Specializing the Datacenter for Planet-Scale Applications | July 2020 | Communications of the ACM

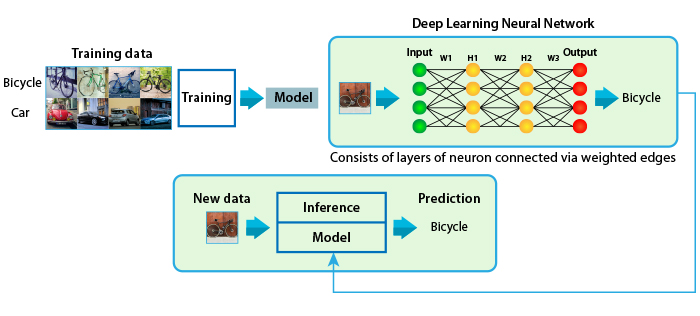

AI Accelerators and Machine Learning Algorithms: Co-Design and Evolution | by Shashank Prasanna | Aug, 2022 | Towards Data Science